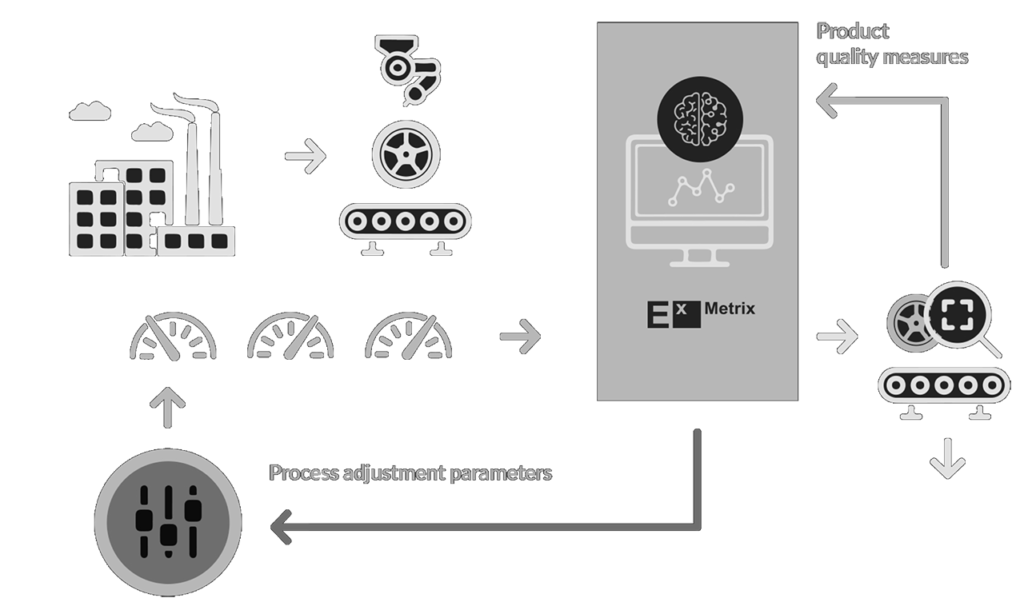

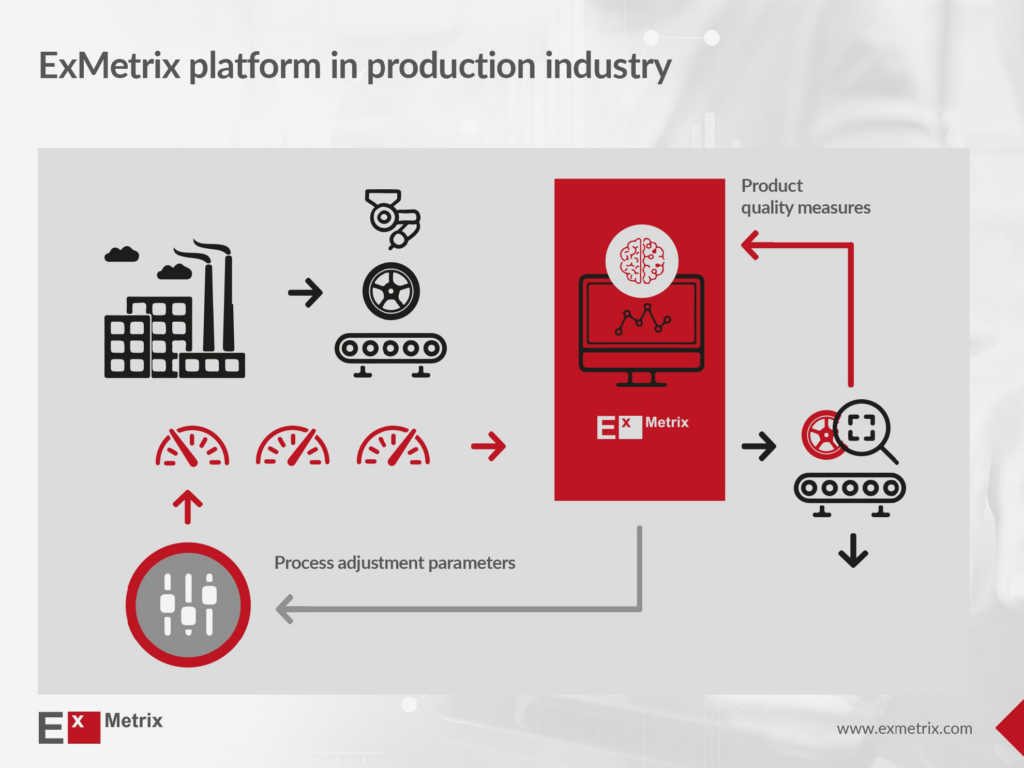

ExMetrix offers state-of-the-art solutions for optimizing production processes across your entire factory by integrating advanced Machine Learning and Artificial Intelligence technologies. Our platform meticulously analyzes a wide array of parameters, including both controllable factors and fixed conditions like current air temperature. By combining our extensive repository of over 50 million data series with your unique production data, ExMetrix builds precise AI-driven models to identify the optimal settings for all your production lines. This comprehensive approach enables not just individual production line improvements but also holistic factory-wide optimization. Whether it’s adjusting machine parameters, selecting the proportions of mixed ingredients, or controlling environmental conditions, ExMetrix helps you achieve superior product quality and operational efficiency. Discover how ExMetrix can elevate your entire factory’s production optimization efforts, leading to significant cost savings, enhanced productivity, and increased competitiveness in the market.

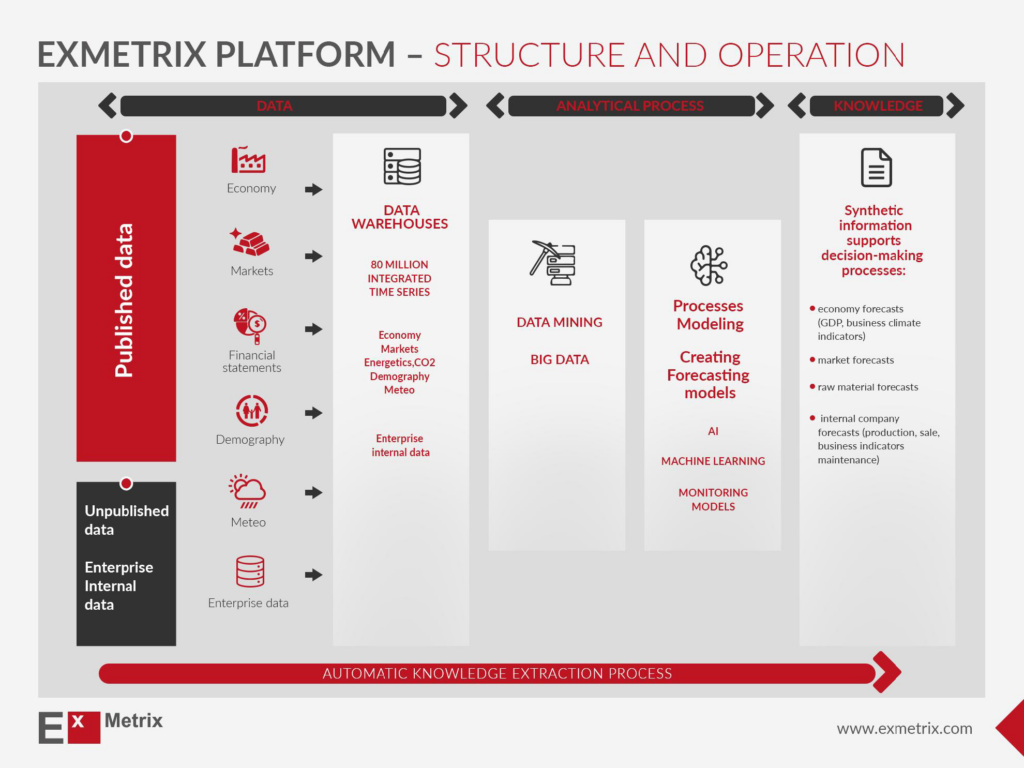

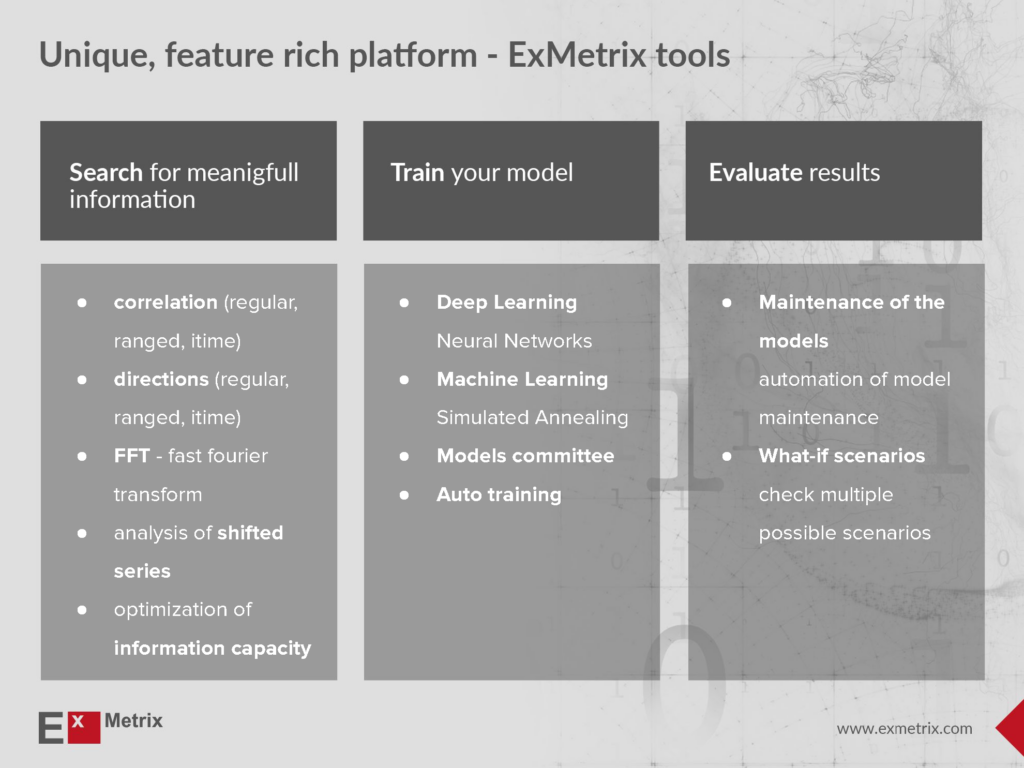

We put 20 years of experience in prediction models and optimisation into a unique SaaS product that:

- connects internal business data with rich industry and economic databases,

- enables you to build unique AI models that solve real problems,

- shortens the entire modeling process from months to hours.

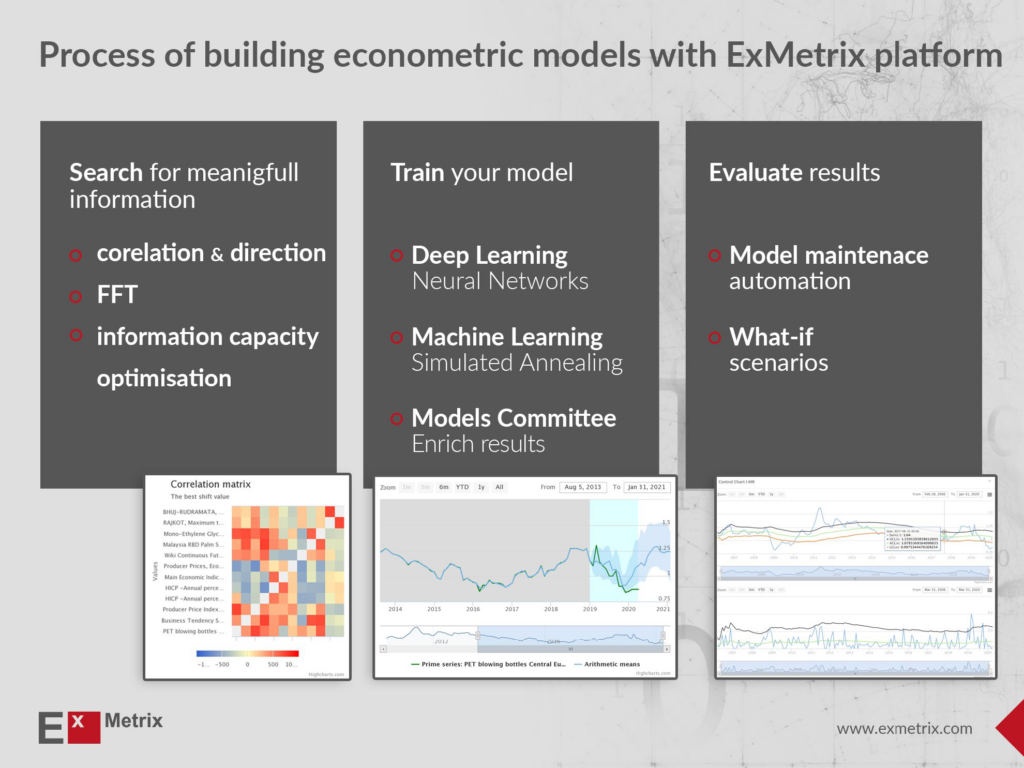

Searching data in accordance with indicated parameters

Database exploration tools let users quickly and effectively find the data they need among the hundreds of thousands of series available in the integrated databases. Search parameters, such as name and time period, can be entered flexibly, depending on the user's needs and the complexity of the search.
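As a rough illustration of searching by optional parameters, here is a minimal Python sketch. It is not the ExMetrix search interface: the `SeriesInfo` record, the `search` function, its parameter names and the sample catalog are all hypothetical and only show how name and time-period filters can be combined flexibly.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class SeriesInfo:
    """Hypothetical catalog entry: a series name and the period it covers."""
    name: str
    start: date
    end: date


def search(catalog, name_contains=None, covers_from=None, covers_to=None):
    """Return entries matching every parameter that was supplied; omitted ones are ignored."""
    results = []
    for entry in catalog:
        if name_contains and name_contains.lower() not in entry.name.lower():
            continue
        if covers_from and entry.start > covers_from:
            continue  # series starts too late to cover the requested period
        if covers_to and entry.end < covers_to:
            continue  # series ends too early to cover the requested period
        results.append(entry)
    return results


# Made-up sample catalog, for illustration only
catalog = [
    SeriesInfo("Air temperature, Warsaw", date(2000, 1, 1), date(2024, 12, 31)),
    SeriesInfo("Steel price index", date(2010, 1, 1), date(2024, 12, 31)),
    SeriesInfo("Air humidity, Warsaw", date(2015, 1, 1), date(2020, 12, 31)),
]

hits = search(catalog, name_contains="air",
              covers_from=date(2016, 1, 1), covers_to=date(2023, 12, 31))
print([h.name for h in hits])
```

Leaving a parameter out simply widens the search, so the same function serves both quick lookups and more complex queries.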

Reshaping and data enhancement

Reshaping and data enhancement bring the data into the form best suited to building and optimizing models of the analysed phenomena.

Operations performed as part of reshaping and data enhancement:

- Automatic change of time interval – applied depending on whether the user builds models and analyses on daily, monthly, quarterly or other data.

- Completing missing data

- Data verification

- Functional transformation – so that models can take in the data as easily as possible
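Three of the operations above (completing missing data, changing the time interval and applying a functional transformation) can be sketched in a few lines of Python. This is an illustrative example under simple assumptions, not the platform's own method: gaps are filled by linear interpolation, daily values are aggregated to monthly means, and the transformation is a natural logarithm. The function names and sample values are hypothetical.

```python
import math
from collections import defaultdict
from datetime import date


def fill_missing(values):
    """Linearly interpolate None gaps; edge gaps take the nearest known value."""
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(values)
    for i in range(len(values)):
        if filled[i] is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = values[right]
        elif right is None:
            filled[i] = values[left]
        else:
            weight = (i - left) / (right - left)
            filled[i] = values[left] + weight * (values[right] - values[left])
    return filled


def to_monthly(dates, values):
    """Aggregate daily observations to monthly means, keyed by (year, month)."""
    buckets = defaultdict(list)
    for d, v in zip(dates, values):
        buckets[(d.year, d.month)].append(v)
    return {key: sum(vs) / len(vs) for key, vs in sorted(buckets.items())}


def log_transform(values):
    """One possible functional transformation; valid for strictly positive data."""
    return [math.log(v) for v in values]


# Made-up daily data with one missing observation
dates = [date(2024, 1, 30), date(2024, 1, 31), date(2024, 2, 1), date(2024, 2, 2)]
raw = [10.0, None, 14.0, 16.0]

clean = fill_missing(raw)
monthly = to_monthly(dates, clean)
print(clean)    # [10.0, 12.0, 14.0, 16.0]
print(monthly)  # {(2024, 1): 11.0, (2024, 2): 15.0}
```

Other choices, such as forward-filling gaps or aggregating by sum instead of mean, fit the same structure; which is appropriate depends on the data being modelled.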

Analysing a single time series

Operations performed when analysing a single time series:

- Calculating statistics, histograms and frequency distributions of time series

- Spectral analysis of time series – reveals the time cycles present in a series

- Decomposition of time series into:

  - Trend component – responsible for long-term changes of time series

  - Seasonal component – responsible for short or medium-term cyclical changes
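To make spectral analysis and decomposition more concrete, here is a small Python sketch using only the standard library. It assumes an additive series and uses two textbook techniques chosen for illustration: a discrete Fourier transform to find the dominant cycle, and a centered moving average to estimate the trend. The function names and the synthetic series are hypothetical and do not describe how ExMetrix implements these features.

```python
import cmath
import math


def dominant_period(values):
    """Return the cycle length (in samples) with the largest DFT power, mean removed."""
    n = len(values)
    mean = sum(values) / n
    centered = [v - mean for v in values]
    best_k, best_power = None, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        power = abs(coeff) ** 2
        if power > best_power:
            best_k, best_power = k, power
    return n / best_k


def decompose(values, period):
    """Additive split: trend via centered moving average, seasonal via per-phase means."""
    n = len(values)
    half = period // 2
    trend = [None] * n  # undefined near the edges, where the window does not fit
    for t in range(half, n - half):
        window = values[t - half:t + half + 1]
        total = sum(window)
        if period % 2 == 0:
            # Even period: half-weight the end points so the window spans one full cycle
            total -= 0.5 * (window[0] + window[-1])
        trend[t] = total / period
    sums, counts = [0.0] * period, [0] * period
    for t in range(n):
        if trend[t] is not None:
            sums[t % period] += values[t] - trend[t]
            counts[t % period] += 1
    seasonal = [s / c for s, c in zip(sums, counts)]
    offset = sum(seasonal) / period  # center the seasonal pattern on zero
    return trend, [s - offset for s in seasonal]


# Synthetic series: slow upward trend plus a repeating 4-step cycle
pattern = [2.0, 0.0, -2.0, 0.0]
series = [10 + 0.05 * t + pattern[t % 4] for t in range(24)]

period = round(dominant_period(series))
trend, seasonal = decompose(series, period)
print(period)                           # 4
print([round(s, 6) for s in seasonal])  # [2.0, 0.0, -2.0, 0.0]
```

A strong trend can dominate the spectrum, so in practice the series is often detrended before the dominant cycle is estimated.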

Analysis of correlations between pieces of data

Analysis of correlations is usually aimed at selecting a group of data that influences the analysed process or phenomenon. A mathematical model is then created from the selected data.

If the model is built for forecasting, the forecast is in effect based on the data selected during the analysis of correlations.

Operations performed as part of analysis of correlations between pieces of data:

- Searching for a data set related to the analysed phenomenon

- Searching for parallels at the level of spectral components: sifting through the database to find pieces of data that behave similarly within specified time frames, for example data correlated with each other at the level of daily or monthly changes

- Optimizing the information capacity of the selected data set. After optimization, the data set should:

  - Describe the analysed phenomenon as well as possible

  - Not include redundant pieces of data (those too similar to each other) – replicating nearly identical data adds nothing to the data set, and needlessly increases both the size of the mathematical model and the time needed for optimization
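The two goals above, relevance to the analysed phenomenon and absence of near-duplicates, can be illustrated with a simple greedy selection based on the Pearson correlation coefficient. This is a sketch under stated assumptions, not the ExMetrix algorithm: the function names, the thresholds `min_relevance` and `max_redundancy`, and the sample series are all hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def select_inputs(target, candidates, min_relevance=0.5, max_redundancy=0.95):
    """Greedily keep series related to the target but not too similar to each other."""
    relevance = {name: abs(pearson(values, target))
                 for name, values in candidates.items()}
    ranked = sorted(candidates, key=relevance.get, reverse=True)
    selected = []
    for name in ranked:
        if relevance[name] < min_relevance:
            break  # every remaining candidate is even less related to the target
        redundant = any(
            abs(pearson(candidates[name], candidates[kept])) >= max_redundancy
            for kept in selected
        )
        if not redundant:
            selected.append(name)
    return selected


# Made-up data: a target and four candidate input series
target = [1, 2, 3, 4, 5, 6, 7, 8]
candidates = {
    "oven_temp": [1, 2, 3, 4, 5, 6, 7, 9],
    "oven_temp_sensor_b": [1, 2, 3, 4, 5, 6, 7, 10],  # near-duplicate of oven_temp
    "line_pressure": [2, 1, 4, 3, 6, 5, 8, 7],
    "unrelated": [5, 1, 6, 2, 5, 1, 6, 2],
}

print(select_inputs(target, candidates))  # ['oven_temp', 'line_pressure']
```

Here the second temperature sensor is dropped because it nearly replicates the first, and the unrelated series is dropped because it says little about the target.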

Building and optimizing mathematical models

- Data sets obtained through correlation analysis are used to build and optimize models.

- Models may be purely descriptive, or may produce a forecast of the analysed phenomenon.

- Different objective functions can be defined for the optimization process. For example, the user may want a model with minimised forecast error or with maximised correlation coefficient.

- Once current data is streamed into the optimized models, they serve as a source of knowledge or forecasts.
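The choice of objective function matters because different objectives can favour different models. The Python sketch below illustrates this with two hypothetical candidate forecasts and two objectives, mean absolute error and (negated) Pearson correlation. It stands in for a full optimization by simply picking the better of two fixed candidates; the names and numbers are assumptions made for the example.

```python
import math


def mean_abs_error(actual, predicted):
    """Average absolute forecast error; lower is better."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def negative_correlation(actual, predicted):
    """Negated Pearson correlation, so that minimising it maximises correlation."""
    n = len(actual)
    ma, mp = sum(actual) / n, sum(predicted) / n
    cov = sum((a - ma) * (p - mp) for a, p in zip(actual, predicted))
    sa = math.sqrt(sum((a - ma) ** 2 for a in actual))
    sp = math.sqrt(sum((p - mp) ** 2 for p in predicted))
    return -cov / (sa * sp)


def best_model(actual, forecasts, objective):
    """Return the name of the candidate whose forecast minimises the objective."""
    return min(forecasts, key=lambda name: objective(actual, forecasts[name]))


# Made-up observations and two candidate forecasts
actual = [10.0, 12.0, 11.0, 14.0, 13.0]
forecasts = {
    "model_a": [13.0, 15.0, 14.0, 17.0, 16.0],  # right shape, constant offset of +3
    "model_b": [10.5, 11.5, 11.5, 13.5, 13.5],  # close in level, slightly wrong shape
}

print(best_model(actual, forecasts, mean_abs_error))        # model_b
print(best_model(actual, forecasts, negative_correlation))  # model_a
```

A model that tracks the shape of the phenomenon perfectly but with an offset wins on correlation, while a model that stays close in level wins on forecast error, which is why the objective should match what the model will be used for.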